Batching requests helps reduce network overhead and improve performance.

Let’s understand the scenario for the implementation.

We have an Angular/React UI application with a requirement to download multiple pieces of data from multiple APIs. The application has a large number of users who do this, so we will learn how to batch the HTTP calls to get better performance.

Batch Requests Benefits

- Reduces network overhead: Fewer HTTP requests from the client mean less latency.

- Optimizes performance: The server can process multiple requests at once.

- Minimizes round trips: Fewer client-server round trips reduce load on both sides.

- Improves efficiency: Especially useful for microservices or API gateways.

Real-World Use Case

We have a screen where the user selects multiple records to download or execute.

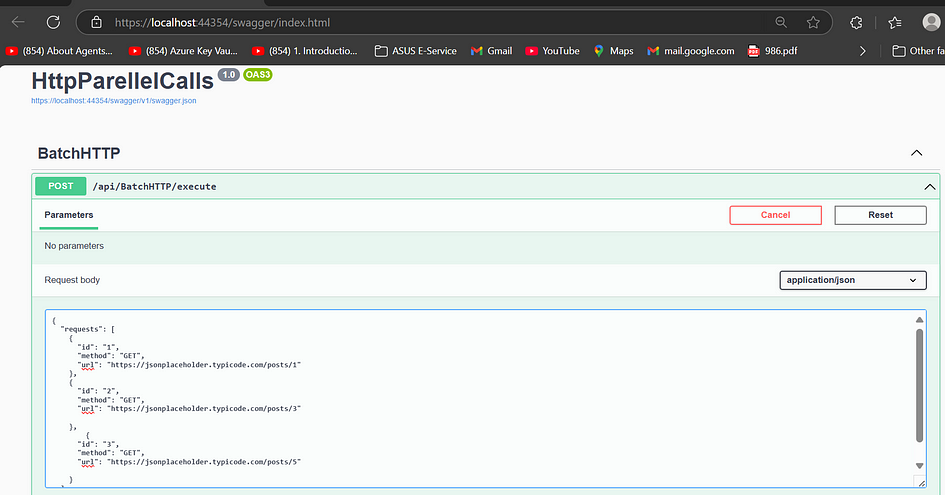

Consider the JSON below, which we send to the server for execution.

All the calls are combined into a single JSON payload like the one below, and the server executes them concurrently. Running the HTTP calls concurrently shortens the wait and improves the user experience.

{

"requests": [

{

"id": "1",

"method": "GET",

"url": "https://jsonplaceholder.typicode.com/posts/1"

},

{

"id": "2",

"method": "GET",

"url": "https://jsonplaceholder.typicode.com/posts/3"

},

{

"id": "3",

"method": "GET",

"url": "https://jsonplaceholder.typicode.com/posts/5"

}

]

}

C# code for parsing the above input JSON

public class HTTPBatchRequest

{

public List<HTTPDataRequest> Requests { get; set; }

}

public class HTTPDataRequest

{

public string Id { get; set; }

public string Method { get; set; } // GET, POST, PUT, DELETE

public string Url { get; set; }

}

Response class: the responses from the APIs are mapped onto this object


public class HTTPBatchResponse

{

public string Id { get; set; }

public int StatusCode { get; set; }

public object Data { get; set; }

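}

Controller Code

using System;

using System.Linq;

using System.Net.Http;

using System.Threading.Tasks;

using Microsoft.AspNetCore.Mvc;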

[ApiController]

[Route("api/[controller]")]

public class BatchHTTPController : ControllerBase

{

private static readonly HttpClient _httpClient = new HttpClient();

public BatchHTTPController()

{

}

[HttpPost("execute")]

public async Task<IActionResult> ExecuteBatch([FromBody] HTTPBatchRequest batchRequest)

{

if (batchRequest?.Requests == null || !batchRequest.Requests.Any())

{

return BadRequest("No batch requests provided.");

}

var tasks = batchRequest.Requests.Select(async request =>

{

HttpResponseMessage response;

if (request.Method.Equals("GET", StringComparison.OrdinalIgnoreCase))

{

response = await _httpClient.GetAsync(request.Url);

}

else

{

return new HTTPBatchResponse

{

Id = request.Id,

StatusCode = 400,

Data = "Unsupported HTTP Method"

};

}

var data = await response.Content.ReadAsStringAsync();

return new HTTPBatchResponse

{

Id = request.Id,

StatusCode = (int)response.StatusCode,

Data = data

};

});

var results = await Task.WhenAll(tasks);

return Ok(results);

}

}

Explanation

a. We post a JSON payload that contains the URLs to call, along with the HTTP method for each.

b. The .NET Core API parses the JSON and makes the HTTP calls concurrently.

c. In the batch processing code, this line is responsible for making multiple HTTP calls concurrently:

var tasks = batchRequest.Requests.Select(async request => { ... });

var results = await Task.WhenAll(tasks);

Here a Collection of Tasks Is Defined (Without Execution)

d. The .Select() method is not a traditional foreach loop. It builds a deferred query: the async lambda is not invoked, and no HTTP call is made, until the sequence is enumerated.

Each element of the sequence is a task that represents an individual HTTP call.

e. Task.WhenAll(tasks) Starts the Calls and Waits for All of Them

Task.WhenAll(tasks) enumerates the sequence, which invokes the lambda for every request, so all HTTP requests are in flight at the same time.

It returns a task that completes when all of them have completed, with the results in the same order as the requests.

This avoids sequential execution, where each call waits for the previous one and the total time is roughly the sum of all the latencies.
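The behaviour described above can be checked without any network access. The following is a minimal sketch, not the controller code: `FakeCallAsync` is a hypothetical stand-in for an HTTP call that records when it starts and then waits 50 ms to simulate latency.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

var started = new List<string>();

// Hypothetical stand-in for an HTTP call: records that it started, then waits.
async Task<string> FakeCallAsync(string id)
{
    started.Add(id);        // runs synchronously, at the moment the lambda is invoked
    await Task.Delay(50);   // simulated network latency
    return $"response-{id}";
}

var ids = new[] { "1", "2", "3" };

// Deferred query: building it does not invoke FakeCallAsync.
IEnumerable<Task<string>> tasks = ids.Select(id => FakeCallAsync(id));
int startedBeforeWhenAll = started.Count;

// WhenAll enumerates the query (starting every call), then waits for all of them.
string[] results = await Task.WhenAll(tasks);

Console.WriteLine($"Started before WhenAll: {startedBeforeWhenAll}");
Console.WriteLine($"Started after WhenAll:  {started.Count}");
Console.WriteLine(string.Join(", ", results));
```

Nothing has started before `Task.WhenAll` is called, all three calls have started once it is called, and the results come back in the same order as the input IDs regardless of which call finishes first.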

Code Execution

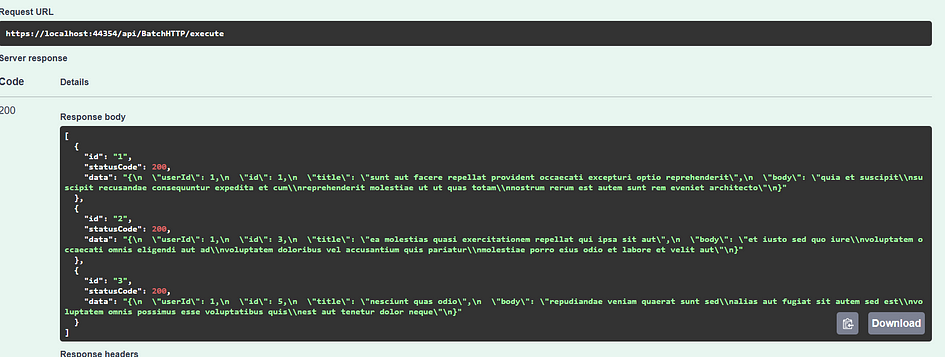

Output

The response contains the data from all three APIs, obtained with a single HTTP call from the client to the .NET Core API server.

Best Practices for Making HTTP Calls from the Backend

A. Bad Practice: Sequential HTTP Calls (Slower)

foreach (var request in batchRequest.Requests)

{

var response = await _httpClient.GetAsync(request.Url); // Waits for each request sequentially

}

Here the HTTP calls are made one after another in a loop. The loop reuses the single shared _httpClient, so the problem is not extra objects but time: each call waits for the previous one to finish, and the total time is roughly the sum of all the response times.

Solution

var tasks = batchRequest.Requests.Select(async request =>

{

return await _httpClient.GetAsync(request.Url);

});

var responses = await Task.WhenAll(tasks);

- Solution: All HTTP requests are started without waiting for one another.

- Faster Execution: No waiting for the previous request before sending the next one.
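To make the difference concrete, here is a rough timing sketch under stated assumptions: `FakeGetAsync` is a hypothetical replacement for `_httpClient.GetAsync` that simply waits about 200 ms, so the numbers reflect simulated latency rather than a real API.

```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical stand-in for _httpClient.GetAsync: each "call" takes about 200 ms.
async Task<int> FakeGetAsync(int id)
{
    await Task.Delay(200);
    return id;
}

var ids = Enumerable.Range(1, 5).ToArray();

// Sequential: each call starts only after the previous one has finished.
var sequentialTimer = Stopwatch.StartNew();
foreach (var id in ids)
{
    await FakeGetAsync(id);
}
sequentialTimer.Stop();

// Concurrent: all calls are started, then awaited together.
var concurrentTimer = Stopwatch.StartNew();
int[] concurrentResults = await Task.WhenAll(ids.Select(id => FakeGetAsync(id)));
concurrentTimer.Stop();

Console.WriteLine($"Sequential: {sequentialTimer.ElapsedMilliseconds} ms");
Console.WriteLine($"Concurrent: {concurrentTimer.ElapsedMilliseconds} ms");
```

With five simulated calls, the sequential loop takes about five times the single-call latency, while the concurrent version takes about one. Exact timings vary by machine.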

B. Limit Concurrent Requests (Avoid Overloading)

Suppose the JSON payload above contains 100 URLs. The server would then have to handle 100 outgoing requests at the same time, which can overload it, so we need to avoid this situation.

If too many requests are made at once, the target API might also reject some of them due to rate limiting.

Use SemaphoreSlim to control concurrency:

private static readonly SemaphoreSlim _semaphore = new(5); // Limit to 5 concurrent calls

var tasks = batchRequest.Requests.Select(async request =>

{

await _semaphore.WaitAsync();

try

{

return await _httpClient.GetAsync(request.Url);

}

finally

{

_semaphore.Release();

}

});

var responses = await Task.WhenAll(tasks);

C. Use Caching for Similar Requests

If the same API call is frequently made, cache the response instead of calling the API again.

if (!_cache.TryGetValue(request.Url, out string cachedData))

{

var response = await _httpClient.GetAsync(request.Url);

cachedData = await response.Content.ReadAsStringAsync();

_cache.Set(request.Url, cachedData, TimeSpan.FromMinutes(10));

}

D. Implement Fallback Pattern

This pattern ensures that an alternative response reaches the client even when the primary API fails.

The Fallback pattern is a resilience design pattern used to provide an alternative or backup solution when the primary service or component fails. It helps ensure that the application continues to function and provide some level of service, even if certain parts are not working as expected.

It ensures service continuity, improves user experience, and provides significant business benefits by reducing the impact of failures.

Fallback Pattern in .NET Core: Handling Service Failures Gracefully | by .Net Labs | Stackademic
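As a minimal sketch of the idea (not the implementation from the linked article), a fallback can be as simple as a try/catch around the primary call. The names `GetWithFallbackAsync`, `FailingPrimary` and `CachedFallback` are hypothetical, and the failing primary is simulated so the example runs without a network.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Tries the primary source first; if it fails with an HTTP error, uses the fallback.
async Task<string> GetWithFallbackAsync(Func<Task<string>> primary, Func<Task<string>> fallback)
{
    try
    {
        return await primary();
    }
    catch (HttpRequestException)
    {
        // Primary API failed: serve the alternative response instead of an error.
        return await fallback();
    }
}

// Hypothetical primary that always fails, simulating an unavailable API.
Task<string> FailingPrimary() =>
    Task.FromException<string>(new HttpRequestException("primary unavailable"));

// Hypothetical fallback, for example a default or previously cached payload.
Task<string> CachedFallback() => Task.FromResult("{\"source\":\"fallback\"}");

string body = await GetWithFallbackAsync(FailingPrimary, CachedFallback);
Console.WriteLine(body);
```

In the batch controller, a wrapper like this could surround each individual call so that one failing URL yields a fallback entry instead of failing the whole batch. Which exceptions to catch and what to return as the fallback are design decisions that depend on the API being called.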

E. HttpClient Best Practices

We use HttpClient widely in applications to call external APIs from C# code.

It is important to understand the impact of using HttpClient incorrectly, because misuse can cause performance issues in the application. The article below covers this:

HttpClient best practice in .Net core | by .Net Labs | Stackademic
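For orientation, here is a small sketch of one commonly recommended setup in .NET Core: a single long-lived HttpClient shared by all requests, with a bounded connection lifetime. It is not taken from the linked article, and the two-minute lifetime and 30-second timeout are illustrative values, not recommendations from the source.

```csharp
using System;
using System.Net.Http;

// One handler and one client for the whole process, reused by every request.
var handler = new SocketsHttpHandler
{
    // Recycle pooled connections periodically so DNS changes are picked up.
    PooledConnectionLifetime = TimeSpan.FromMinutes(2) // illustrative value
};

var sharedClient = new HttpClient(handler)
{
    Timeout = TimeSpan.FromSeconds(30) // illustrative value
};

Console.WriteLine($"Timeout: {sharedClient.Timeout}");
```

The controller above already follows the first half of this advice by keeping `_httpClient` in a static readonly field instead of creating a new client per request.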

Conclusion

We have seen how to make HTTP calls concurrently using a batch request, and the benefits of doing so.

Thanks

#coding, #coder, #httpclient, #httprequest, #performance , #api, #development , #software, #programming , #programmer, #dotnet

Happy Coding
